Schedule

Click on the text like “Week 1: Jan 20 – 24” to expand or collapse the items we covered in that week.

I will fill in more detail and provide links to lecture notes and labs as we go along. Items for future dates are tentative and subject to change.

Wed, Jan 22

- In class, we will work on:

- After class, please:

- Register for GitHub here if you haven’t already; I will ask you to provide your GitHub user name in the questionairre below.

- Fill out a brief questionnairre (if you are taking two classes with me, you only need to fill out this questionairre once)

- Fill out this brief poll about when my office hours should be held (if you are taking two classes with me, you only need to fill out this poll once)

- Sign up for our class at Piazza (anonymous question and answer forum): https://piazza.com/mtholyoke/spring2020/stat344ne

- Reading

- Chollet:

- Skim sections 1.1 – 1.3. This is pretty fluffy and we’ll mostly either skip it or talk about it in much more depth later, but it might be nice to see now for a little context.

- Read sections 2.1 – 2.3 more carefully. We will talk about this over the next day or two.

- Goodfellow et al.:

- Read the intro to section 5.5 (but don’t worry about KL divergence), read section 5.5.1, lightly skim section 6.1, read the first 3 paragraphs of section 6.2.1.1, and skim sections 6.2.2.1, 6.2.2.2, and 6.2.2.3. We will talk about this over the next day or two.

- Chollet:

- Videos

- I moved the videos that were here to later days.

- Homework 1

- Written part due 5pm Wed, Jan 29

- Coding part due 5 pm Fri, Jan 31

Fri, Jan 24

- In class, we will work on:

- Maximum likelihood and output activations for binary classification. I don’t have any lecture notes for this.

- Matrix formulation of calculations for logistic regression across multiple observations. I don’t have any lecture notes for this, but it’s written up in Lab 01.

- Highlights of NumPy: https://github.com/mhc-stat344ne-s2020/Python_NumPy_foundations/blob/master/Python.ipynb

- Lab 1: you do some calculations for logistic regression in NumPy. You should receive an email from GitHub about this.

- After class, please:

- Reading

- Continue/finish readings listed for Wed, Jan 22.

- Take a look at the NumPy document listed above. You can’t run it directly on GitHub, but if you want you could sign into colab.research.google.com and try out some of the code there. Also cross-reference this with the Numpy section in Chollet.

- Videos: Here are some videos of Andrew Ng talking about logistic regression and stuff we did today; you don’t need to watch these, but feel free if you want a review:

- Logistic regression set up: youtube

- Loss function just thrown out there without justification: youtube

- Discussing set up for loss function via maximum likelihood: youtube

- Start at thinking about “vectorization”, i.e. writing things in terms of matrix operations: youtube

- More on vectorization, but I think this video is more complicated than necessary and you might skip it: youtube

- Vectorizing logistic regression. Note that Andrew does this in the variant where your observations are in columns of the X matrix. I want us to understand that you can also just take the transpose of that and get a just-as-valid way of doing the computations, just sideways. This is worth understanding because different sources and different software packages will organize things different ways and you want mental flexibility. We talked about this in class but I don’t know of a video that explains things with the other orientation. youtube

- Broadcasting in NumPy – This is among my least favorite examples, apologies!

- Homework 1

- Written part due 5pm Wed, Jan 29

- Coding part due 5 pm Fri, Jan 31

- Reading

Mon, Jan 27

- In class, we will work on:

- Maximum likelihood and output activations for regression. Lecture notes: pdf

- Lab 1 about numpy calculations relevant to logistic regression – complete and turn in by Friday, Jan 31.

- After class, please:

- Homework 1

- Written part due 5pm Wed, Jan 29

- Coding part due 5 pm Fri, Jan 31

- Lab 1

- Due 5pm Fri, Jan 31

- Homework 1

Wed, Jan 29

- In class, we will work on:

- Overview/details of maximum likelihood for logistic regression. Demo visualization here.

- Overview/details of maximum likelihood for linear regression. Demo visualization here.

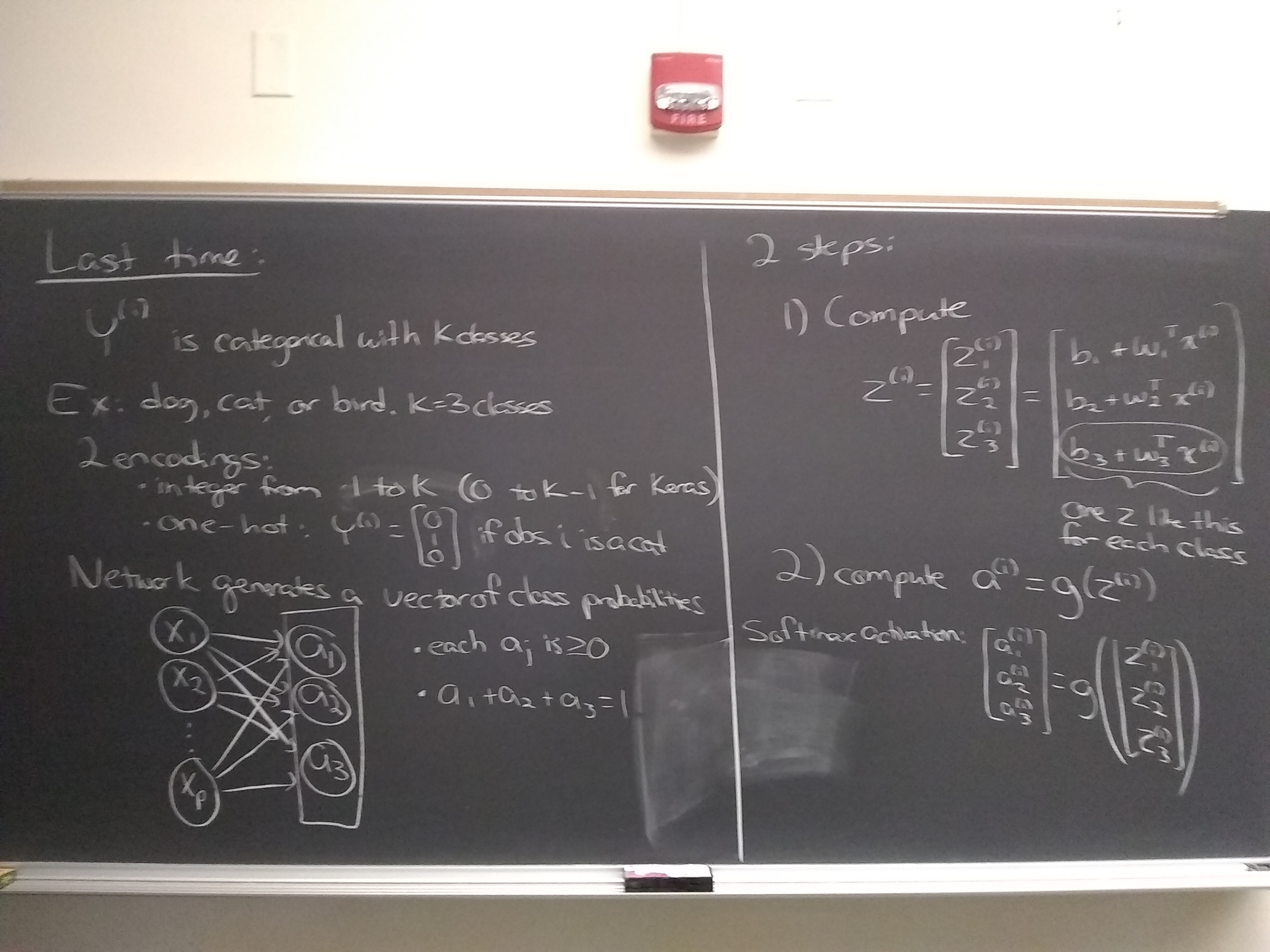

- Maximum likelihood and output activations for multi-class classification. No lecture notes, but see the video linked below.

- Summary of models, activation functions, and losses so far: pdf

- After class, please:

- Videos

- Some of you may find these videos helpful:

- Softmax regression is another word for multinomial regression, which is the equivalent to logistic regression for multi-class classification.

- Some of you may find these videos helpful:

- Homework 1

- Written part due 5pm today Wed, Jan 29

- Coding part due 5 pm Fri, Jan 31

- Lab 1

- Due 5pm Fri, Jan 31

- Videos

Fri, Jan 31

- In class, we will work on:

- Quiz on logistic regression

- Highlights from last class (see also first page of lecture notes below)

- More concrete example of calculations for multinomial logistic regression

- Lecture notes: pdf

- We also wrote out that for \(m\) observations, \[\begin{align*} z &= \begin{bmatrix} z^{(1)} & \cdots & z^{(m)} \end{bmatrix} = \begin{bmatrix} z^{(1)}_1 & \cdots & z^{(m)}_1 \\ z^{(1)}_2 & \cdots & z^{(m)}_2 \\ \vdots & \ddots & \vdots \\ z^{(1)}_K & \cdots & z^{(m)}_K \end{bmatrix} \\ &= \begin{bmatrix} b_1 + w_1^T x^{(1)} & \cdots & b_1 + w_1^T x^{(m)} \\ b_2 + w_2^T x^{(1)} & \cdots & b_2 + w_2^T x^{(m)} \\ \vdots & \ddots & \vdots \\ b_K + w_K^T x^{(1)} & \cdots & b_K + w_K^T x^{(m)} \end{bmatrix} \\ &= \begin{bmatrix} b_1 \\ b_2 \\ \vdots \\ b_K \end{bmatrix} + \begin{bmatrix} w_1^T \\ w_2^T \\ \vdots \\ w_K^T \end{bmatrix} \begin{bmatrix} x^{(1)} & x^{(2)} & \cdots & x^{(m)} \end{bmatrix} \end{align*}\] where the last equals sign uses Python broadcasting to pull out the vector \(b\).

- Hand out doing the calculations: pdf

- General Python stuff (also posted on resources page)

- After class, please:

- Homework 1

- Coding part due 5 pm today Fri, Jan 31

- Lab 1

- Due 5pm Fri, Jan 31

- Homework 2

- Coding part due 5pm Fri, Feb 7

- Videos

- Some of you may find this sequence of three videos introducing Keras helpful (note that I will follow the code in Chollet, which differs slightly from code used in these videos):

- Video 1: data set up

- Video 2: defining the network

- Video 3: training models

- Some of you may find this sequence of three videos introducing Keras helpful (note that I will follow the code in Chollet, which differs slightly from code used in these videos):

- Homework 1

Mon, Feb 03

- In class, we will work on:

- Hidden layers and forward propagation. Lecture notes: pdf. Note there is an error on the bottom of page 1: for multinomial regression, you use a softmax activation, not a sigmoid activation.

- Lab 02

- After class, please:

Wed, Feb 05

- In class, we will work on:

- Gradient Descent, start on backpropagation for logistic regression. Lecture notes: pdf. Note there is an error on page 6 that we did not make in class: \(dJdz = a - y\), not \(y - a\).

- After class, please:

- Videos

- Andrew Ng discusses Gradient Descent

- Andrew Ng discusses Derivatives in Computation Graphs. This video feels unnecessarily complicated to me, but you might find it helpful to see things worked out with actual numbers.

- Andrew Ng discusses Gradient Descent for Logistic Regression with 1 observation

- Andrew Ng discusses Gradient Descent for Logistic Regression with m observatons. But he uses a for loop that we really don’t want. He then gets rid of the for loop later, but I feel this is extra mental energy over what we did.

- Andrew Ng discusses Vectorizing Gradient Descent for Logistic Regression with m observatons. This is the matrix formulation that we want. Note that where we would write \(\frac{\partial J(b, w)}{\partial z}\)$, Andrew writes

dz

- Videos

Fri, Feb 07

Mon, Feb 10

- In class, we will work on:

- After class, please:

- Videos

- Andrew Ng discusses Gradient descent for neural networks, but he is inconsistent in his use of transposes for \(W\).

- Andrew Ng discusses “intuition” for backpropagation

- Videos

Wed, Feb 12

- In class, we will work on:

- Continue on backpropagation with hidden layers – notes posted Monday

- Start lab on backpropagation

- After class, please:

Fri, Feb 14

- In class, we will work on:

- Issues with gradient descent, learning rates, and stochastic gradient descent: pdf

- More time for lab

- After class, please:

- Videos:

- Andrew Ng discusses feature normalization

- Andrew Ng discusses stochastic gradient descent (which he calls mini-batch gradient descent)

- Andrew Ng discusses more about stochastic gradient descent

- Videos:

Mon, Feb 17

- In class, we will work on:

- After class, please:

- Homework 3 due 5pm Fri, Feb. 21

- Videos:

- Andrew Ng discusses Regularization

- Andrew Ng discusses intuition for why regularization works using ideas that are fairly different from my motivation.

- Reading:

- Chapter 4 of Chollet

- Section 7.1.1 of Goodfellow et al up through Equation 7.5.

Wed, Feb 19

- In class, we will work on:

- After class, please:

- Homework 3 due 5pm Fri, Feb. 21

- Videos:

- Andrew Ng discusses dropout regularization

- Andrew Ng discusses more about dropout

- Andrew Ng discusses vanishing and exploding gradients

- Andrew Ng discusses weight initialization

Fri, Feb 21

- In class, we will work on:

- Start on convolutional neural networks: pdf

- After class, please:

- Homework 3 due 5pm today Fri, Feb. 21

Mon, Feb 24

- In class, we will work on:

- After class, please:

- Homework 4 due 5pm Fri, Feb. 28

Wed, Feb 26

- In class, we will work on:

- Generators in Python, Data Augmentation for image data: pdf

- Lab on CNNs

- After class, please:

- Homework 4 due 5pm Fri, Feb. 28

Fri, Feb 28

Mon, Mar 09

- In class, we will work on:

- Lab 7 on RNN (Dinosaur names)

Wed, Mar 11

- In class, we will work on:

- Gated Recurrence Units (GRU) and Long Short-Term Memory Units (LSTM). Lecture notes: pdf

Fri, Mar 13

- In class, we will work on:

- Lab 8 on GRU and LSTM

Mon, Mar 16

- No Class: Midsemester Break. Safe travels!

Wed, Mar 18

- No Class: Midsemester Break. Safe travels!

Fri, Mar 20

- No Class: Midsemester Break. Safe travels!

Mon, Mar 23

- No Class: Midsemester Break. Safe travels!

Wed, Mar 25

- No Class: Midsemester Break. Safe travels!

Fri, Mar 27

- No Class: Midsemester Break. Safe travels!

Mon, Mar 30

- In class, we will work on:

- Interpretation of inner product as a measure of similarity between vectors

- Notes: pdf

- First high level, overview of word embeddings. Reminder of transfer learning, statement of limitations of one-hot encodings that are addressed by word embeddings. The goal for today is to get general ideas. We’ll develop more detailed understanding over the next few days.

- Notes: pdf

- The discussion of transfer learning I mentioned was on Friday Feb 28.

- In the video, I said we’d have a lab applying word embeddings to a classification problem, but I decided to make this lab shorter and more focused on just working with the actual word embedding vectors. We’ll do the application to classification next.

- Interpretation of inner product as a measure of similarity between vectors

- After class, please:

- Work on lab 9. Solutions are posted on the labs page.

Wed, Apr 01

- In class, we will work on:

- Embedding matrices

- Notes: pdf

- One more thing I wanted to say but forgot to say in the video: Although we think of the embedding matrix in terms of a dot product with a one-hot encoding vector, in practice one-hot encodings are very memory intensive so we don’t actually do that when we set it up in Keras. Instead, we use a sparse encoding. For example, if “movie” is word 17 in our vocabulary, then we just represent that word with the number 17 rather than a vector of length 10000 with a 1 in the 17th entry. This is much faster.

- Lab 10.

- Embedding matrices

- After class, please:

Fri, Apr 03

- In class, we will work on:

- Review of topics from linear algebra: vector addition, subtraction, and orthogonal prrojections.

- You only need to watch the first few minutes of this video; the rest is optional

- Notes: pdf

- Reducing bias in word embeddings.

- That was a lot of making videos and now I’m tired. Let’s do the lab on Monday.

- Review of topics from linear algebra: vector addition, subtraction, and orthogonal prrojections.

Mon, Apr 06

- In class, we will work on:

- No new lecture material today.

- Work on lab 11. Solutions are posted on the labs page.

Wed, Apr 08

- In class, we will work on:

Fri, Apr 10

- In class, we will work on:

- Batch Normalization:

- Notes: pdf

- Errors: At minute 25, the standard deviation is \(\gamma^{[l-1]}\), not \(\sqrt{\gamma^{[l-1]}}\)

- I’d like you to watch a few videos by Andrew Ng introducing methods for object localization. I think these videos are well done and I wouldn’t have much to add or do differently so it doesn’t make sense for me to reproduce them. I’ll try to sum up important points from these videos for Monday.

- Start on object localization. My only criticism of this video is that around minute 9, Andrew defines a loss function based on mean squared error. This is not really the right way to set it up; he briefly states the best way to do this later, but I will expand on this briefly on Monday.

- Landmark detection:

- Sliding windows:

- Convolutional implementation of sliding windows. I will expand on this in a separate video for Monday and in a lab, so don’t worry if this is confusing for now. (It’s still worth watching the video to get what you can out of it.)

- Batch Normalization:

Mon, Apr 13

- In class, we will work on:

- More on object detection with YOLO. I recommend you watch both the video I made and the videos from Andrew Ng linked to below. There is a lot of common material, but there are also things I covered that Andrew didn’t, and things he covered that I didn’t. I think it’s always nice to have two perspectives.

-

- Slides: pdf

- Notes: I want to note that although I have gone into a little more detail about the set up of the model architecture for YOLO than Andrew does, my presentation is still a simplification of the actual YOLO model in several ways. If you’re curious, the papers are at https://arxiv.org/pdf/1506.02640.pdf and https://arxiv.org/pdf/1612.08242.pdf

- After class, please:

Wed, Apr 15

- In class, we will work on:

- Last class I made one mistake and several intentional simplifications when discussing YOLO. Today, I want to fix the mistake and sketch a little more detail where I simplified things before.

- Correction about width and height of boxes:

- Slides: pdf

- More detail about parameterization of location

- Slides: pdf

- Training on multiple data sets; multi-label set up

- Slides: pdf

- Convolutional set up

- Slides: pdf

- Ethical considerations

- Here’s a sobering quote from the end of the third YOLO paper (which is written in informal style…):

- Please watch this video from New York Times:

- Please read this article about problems with using labelled image data without being very careful: https://www.nytimes.com/2019/09/20/arts/design/imagenet-trevor-paglen-ai-facial-recognition.html

- It’s very long, but you may be interested in checking out the website for the original project: https://www.excavating.ai/

- Here’s a sobering quote from the end of the third YOLO paper (which is written in informal style…):

Fri, Apr 17

- In class, we will work on:

- Lab 13 on YOLO

- After class, please:

Mon, Apr 20

- In class, we will work on:

Wed, Apr 22

- In class, we will work on:

- Keras backend API:

- Notebook on GitHub

- Lab on neural style transfer

- Keras backend API:

- After class, please:

Fri, Apr 24

Mon, Apr 27

- In class, we will work on:

- Lab 15

- After class, please:

- Finish the last HW, due tomorrow Tuesday April 28 at 5pm.

- Have a good summer! We’re done!

We will not have a final exam in this class.